What happens when someone uses AI to mimic a top U.S. official and starts calling foreign leaders?

The State Department is scrambling to answer that exact question.

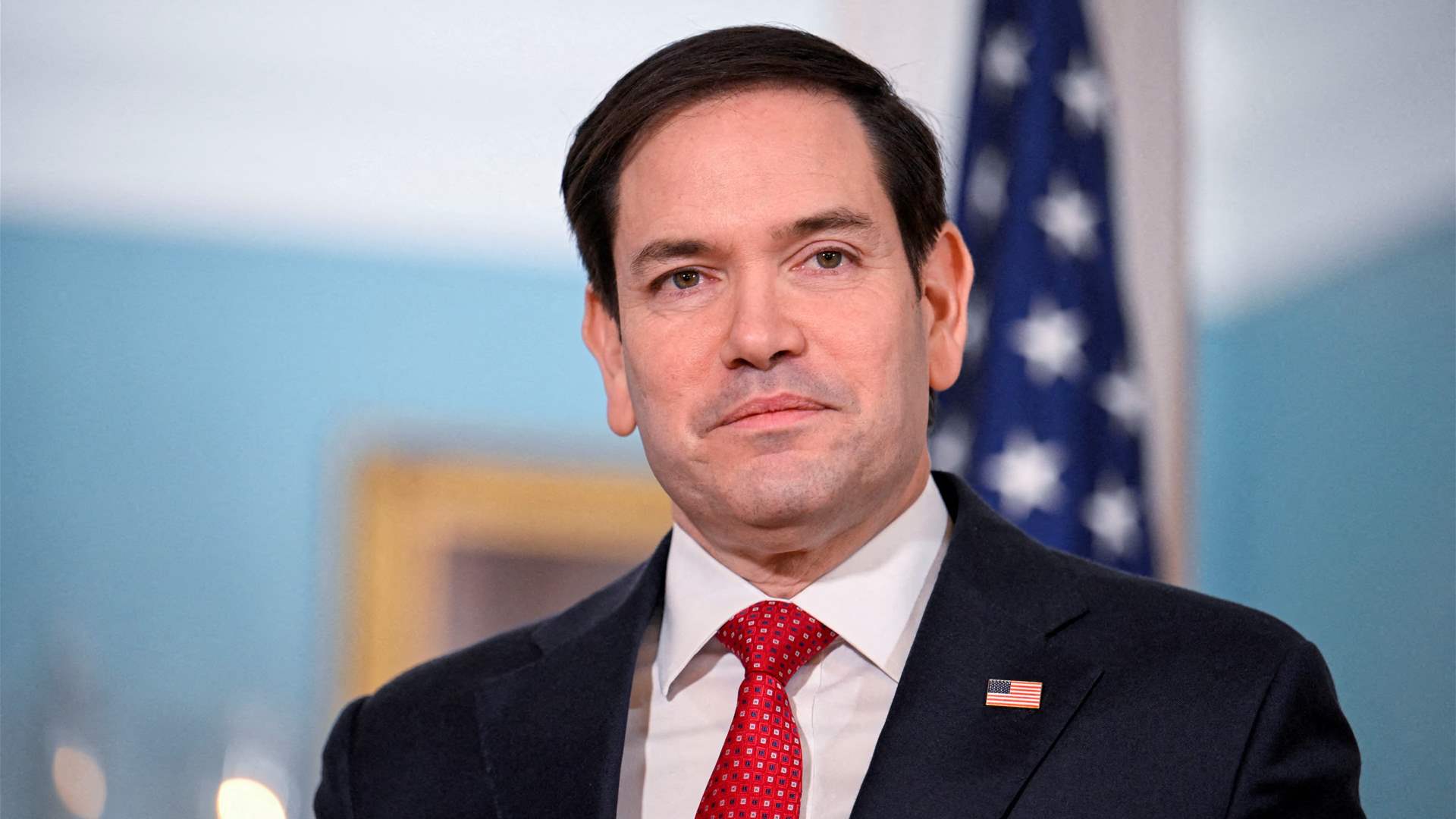

An unknown impersonator reportedly used artificial intelligence to create a convincing voice clone of Secretary of State Marco Rubio.

The impersonator then contacted at least five high-profile individuals, including three foreign ministers, through the encrypted Signal messaging app.

According to a leaked diplomatic cable obtained by CBS News, the impersonator left voicemails and invited targets to chat on Signal, using a fake account labeled marco.rubio@state.gov.

The State Department acknowledged the incident, calling it a serious concern.

What’s The Threat?

Officials say there’s “no direct cyber threat” to government systems.

Still, if a foreign minister receives a voicemail from what sounds like the U.S. Secretary of State, wouldn’t they listen?

So far, the hoax seems to have flopped. The Associated Press cited a U.S. official saying the scam wasn’t “very sophisticated.”

But experts warn it’s a troubling sign of how easily AI can blur truth and fiction at the highest levels.

Secretary Rubio has remained silent—but in an age of deepfakes, silence might not always be golden.

The bigger question now: how do we protect trust when anyone’s voice can be copied with a few clicks?